The AI Safety Index 2025: A Wake-Up Call for Cybersecurity and Privacy in the Age of Advanced AI

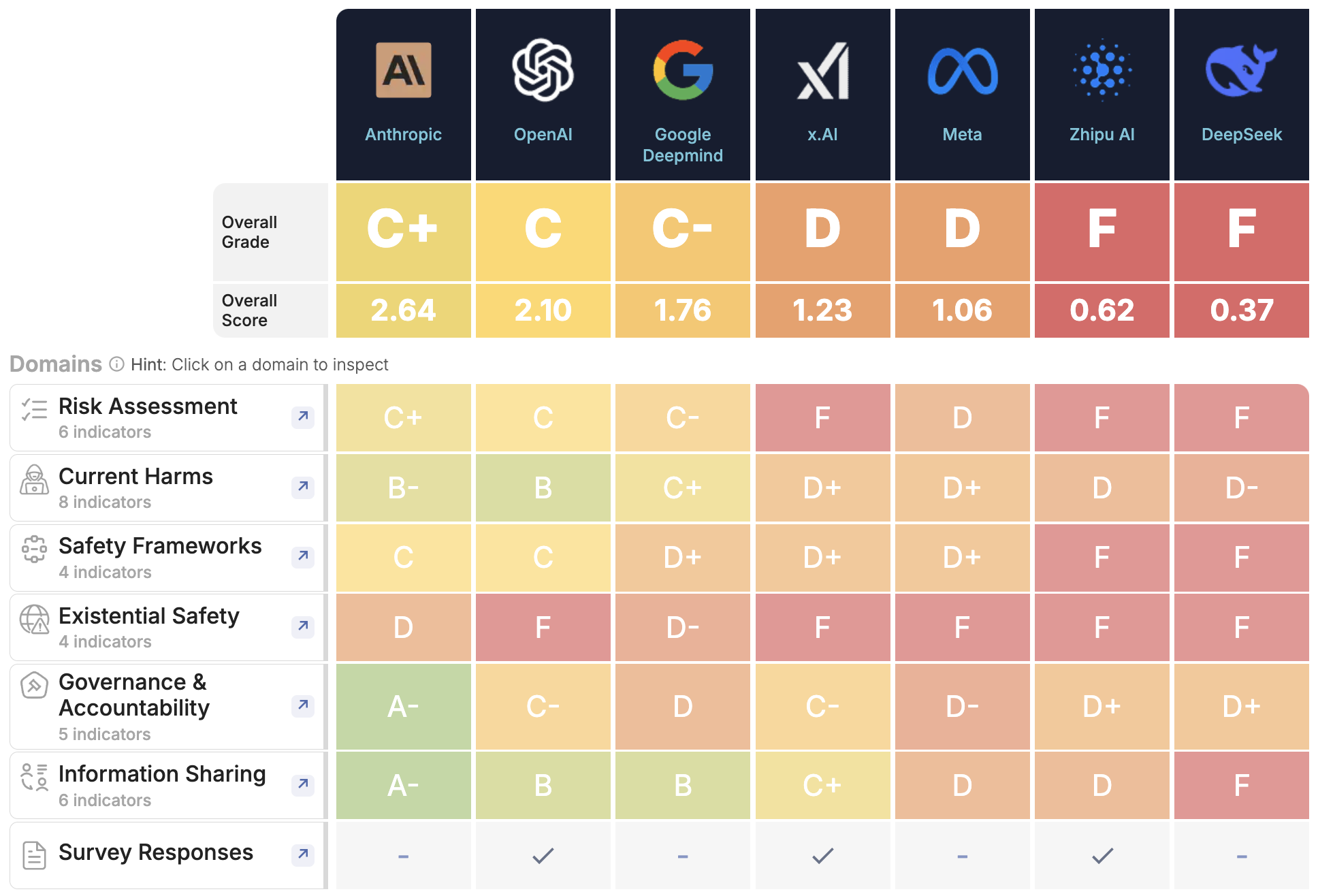

In the rapidly evolving landscape of artificial intelligence (AI), the AI Safety Index – Summer 2025, published by the Future of Life Institute, offers a comprehensive assessment of the industry’s preparedness to tackle the potential risks associated with advanced AI systems. This landmark study evaluates leading AI companies across six critical safety domains, highlighting concerning gaps in risk assessments, privacy protection, and governance frameworks.

As AI technologies progress at an unprecedented pace, the study’s findings underscore the urgent need for robust safety frameworks to mitigate risks and ensure privacy protection. With many AI companies falling short in key areas, this report serves as a stark reminder of the need for stronger safeguards, particularly as AI continues to play a larger role in our personal and professional lives.

Key Findings of the AI Safety Index 2025

The AI Safety Index ranks seven leading AI companies on various criteria, including risk assessments, safety frameworks, existential safety, and governance and accountability. The study reveals that no company achieved a top grade, with most receiving scores that indicate significant vulnerabilities in addressing both immediate and long-term risks. Among the key findings:

- Risk Assessments and Safety Frameworks: The companies assessed performed poorly in creating and implementing effective safety frameworks, with none of them adequately addressing the potential privacy and security risks associated with AI. These frameworks are vital in managing the vulnerabilities that can arise from AI systems, especially those handling sensitive personal data.

- Governance and Accountability: Despite advancements in AI, the companies assessed fell short in establishing clear governance structures to manage and monitor their AI technologies. The lack of transparent accountability mechanisms makes it challenging to ensure that AI systems operate within the boundaries of ethical standards and privacy regulations.

- Privacy and Data Protection: With AI technologies often relying on vast datasets, many of which contain personal information, the potential for privacy breaches is significant. The study reveals that many AI firms are not implementing adequate privacy safeguards, leaving personal and sensitive data vulnerable to misuse or unauthorized access. As AI systems become more integrated into everyday life, the need for strong privacy protections becomes increasingly urgent.

The Intersection of AI Safety and Cybersecurity

The AI Safety Index 2025 offers crucial insight into the risks that AI poses to both privacy and cybersecurity. As AI evolves, the cybersecurity threats it creates grow more complex. The study’s findings highlight a critical challenge: as AI companies push the boundaries of innovation, they must also prioritize cybersecurity measures that protect personal data, prevent unauthorized access, and ensure the integrity of AI systems.

In this context, cybersecurity becomes not just a technological concern but a fundamental component of responsible AI development. The integration of AI into sectors such as healthcare, finance, and education — where personal and sensitive data is regularly handled — underscores the importance of privacy protection. The failure to secure AI systems against breaches, data theft, or manipulation can have devastating consequences for individuals and society at large.

What Needs to Be Done?

The AI Safety Index study makes it clear that AI companies must prioritize security and privacy by integrating comprehensive safety frameworks and governance structures. Key steps include:

- Developing Stronger Risk Assessment Protocols: AI companies must establish more robust risk management frameworks to evaluate the potential privacy and cybersecurity risks of their systems. This includes conducting regular assessments of AI system vulnerabilities and implementing measures to mitigate these risks before deployment.

- Strengthening Privacy Protections: As AI systems process vast amounts of personal data, companies must implement data protection policies that comply with global privacy regulations, such as the GDPR. This involves securing user data, ensuring transparency in how data is used, and giving individuals control over their personal information.

- Implementing Transparent Governance and Accountability: Clear governance structures and accountability mechanisms are essential to ensuring that AI systems operate ethically and securely. These structures should include oversight bodies, auditing mechanisms, and clear reporting channels to hold AI developers accountable for any security or privacy breaches.

- Preparing for Existential Safety Risks: As AI systems become more complex, companies need to develop contingency plans and safety protocols to address potential existential risks. Ensuring the controllability of AI technologies and protecting against potential harm is crucial for long-term safety and privacy.
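
To make the privacy-protection step above more concrete, here is a minimal Python sketch of one common safeguard: pseudonymizing user identifiers and redacting email addresses before records enter an AI data pipeline. It is an illustration only and does not come from the AI Safety Index report. The function names, the record fields (`user_id`, `text`), and the `PSEUDONYM_KEY` value are all hypothetical, and a simple pattern like this will not catch every form of personal data.

```python
import hashlib
import hmac
import re

# Hypothetical key for illustration; a real deployment would load this
# from a secrets manager rather than hard-coding it.
PSEUDONYM_KEY = b"replace-with-a-real-secret"

# Deliberately simple email pattern (an assumption, not a complete PII detector).
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(user_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Return a stable keyed hash so records stay linkable without the raw ID."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def redact_emails(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_PATTERN.sub("[EMAIL]", text)


def prepare_record(record: dict) -> dict:
    """Build a reduced record holding only the pseudonym and the redacted text."""
    return {
        "user": pseudonymize(record["user_id"]),
        "text": redact_emails(record["text"]),
    }


if __name__ == "__main__":
    raw = {"user_id": "alice", "text": "Contact me at alice@example.com please."}
    print(prepare_record(raw))
```

A keyed hash (HMAC) is used here instead of a plain hash so that identifiers cannot be recovered simply by hashing a list of known user IDs. Pseudonymized data of this kind is generally still treated as personal data under the GDPR, so a step like this complements access controls and transparency measures rather than replacing them.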

Conclusion: A Call for Action

The AI Safety Index 2025 serves as a wake-up call for AI companies, policymakers, and cybersecurity professionals alike. While AI promises significant advancements, it also presents profound risks, particularly in the realms of privacy and cybersecurity. The industry’s inability to adequately address these risks highlights the pressing need for stronger safety measures, more comprehensive privacy protections, and more transparent governance.

As AI technologies continue to shape our future, it is imperative that they be developed with the highest standards of cybersecurity and privacy in mind. Without these safeguards, we risk compromising the very trust that underpins the digital ecosystems we rely on every day. The responsibility falls on all of us, from developers to users, to ensure that AI is harnessed for the greater good while keeping privacy and security at the forefront of its evolution.

Read the full report or two-page summary here: https://futureoflife.org/ai-safety-index-summer-2025/